-

TEMPORARY PAUSE FOR SIGNIFICANT HELP

To everyone: best wishes for 2021! I hope it will be a year in which we get the good things back on track and show the less good things the door for good. For me it will be a year with a lot of working from home (which I count among the good things!) and without flights to conferences (not really necessary anyway!). In other words: plenty of time to finish writing my dissertation.

In 2021 I get to work full-time on completing my PhD, so I am pausing my consultancy Significant Help for a year.

-

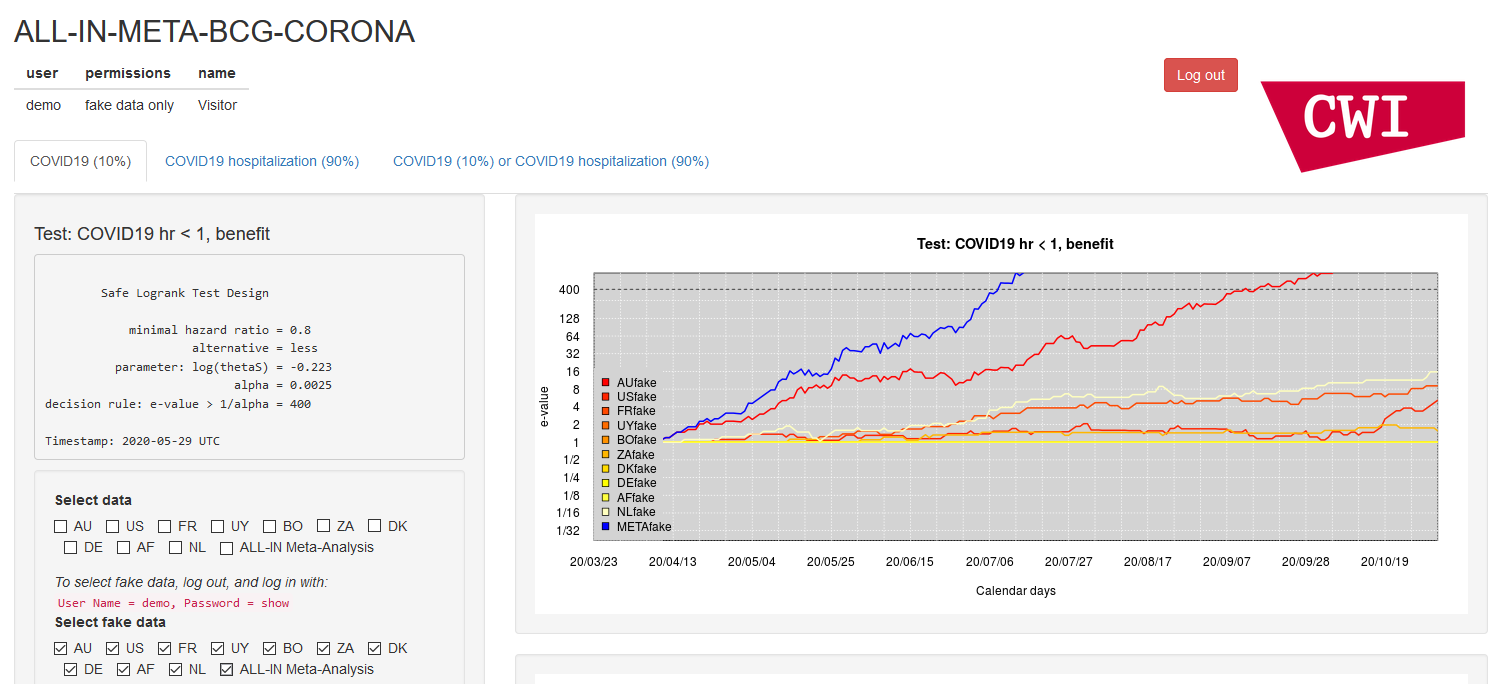

[General] Interview in Het Parool and New Scientist

An enlargement of the photo above: Het Parool.JPG.

Yesterday, an interview with my PhD supervisor Prof. Peter Grünwald and me appeared in the Saturday edition of Het Parool and on the New Scientist website. Many thanks, Fenna van der Grient, for the wonderful story you wrote!

The piece is about ALL-IN meta-analysis, the capstone of my PhD research. ALL-IN meta-analysis stands for Anytime, Live and Leading INterim meta-analysis and is a statistical method for monitoring and combining interim results of randomized studies. It lets us decide sooner whether a new treatment or vaccine works. It becomes easier for studies to collaborate even if they were not designed together in advance. And hopefully fewer unfinished studies will disappear into a file drawer as a result. Large-scale application of these methods could even give us a better interim view of which lines of research need extra funding to expand their studies, and which are disappointing and had better stop.

Read the piece on the New Scientist website here, the piece on the Het Parool website here, and see a photo of the printed newspaper here: Het Parool.JPG.

My personal look back on the ALL-IN-META-BCG-CORONA dashboard that we built can be found in this blog post (in English).

-

[Personal] COVID-19 trial dashboard

Could all clinical trial results be monitored in a dashboard like this? We hope so: decisions on efficacy and futility can be taken as soon as possible. Collaboration is made straightforward. Small studies can easily contribute and might be file-drawered less often. And for decisions on (funding) new research, a live account of the evidence might improve the assessment of which research needs extra trials.

We call it ALL-IN meta-analysis: Anytime, Live and Leading INterim meta-analysis.

Yet for such live monitoring of interim trial results, new statistical methodology had to be developed. This is what we have been doing since the start of the corona pandemic. We went 'all-in' on developing methods for clinical trials with time-to-event data (also known as survival data). Ideas for live monitoring of randomized trials had been in the works for ten years (e.g. already in Prof. Peter Grünwald's inaugural lecture in 2009). And for me, three years of (part-time) PhD research were exactly enough to know that we could develop this for COVID-19 clinical trials. So in an amazing effort, we went from initial mathematical ideas, to software, to simulations, to tutorials, to mathematical proofs, to a paper that is soon to appear. I'm very grateful to dr. Alexander Ly for working so hard with me on this, and to Muriel Perez for joining the mathematical effort.

And as the proof of the pudding: a wonderful collaboration with the university medical centers in Utrecht and Nijmegen, UMC Utrecht (Prof. Marc Bonten) and Radboud UMC (Prof. Mihai Netea), on trials studying the BCG vaccine as a general immune system booster. I want to specifically acknowledge dr. Henri van Werkhoven, the trial statistician of the Utrecht/Nijmegen trials on the BCG vaccine, for his confidence and nerve amid the chaos of the initial months of the pandemic. The many conversations I had with him really fine-tuned the ALL-IN approach and its presentation. He is now the Principal Investigator of ALL-IN-META-BCG-CORONA, of which I am the meta trial statistician.

You can have a look at a demo version of our dashboard at https://cwi-machinelearning.shinyapps.io/ALL-IN-META-BCG-CORONA/

Login
User name: demo
Password: show

Detailed materials of ALL-IN-META-BCG-CORONA can be found on https://projects.cwi.nl/safestats/. A preprint paper and more links to blog posts will appear soon.

-

[General] Website Platform for Young Meta-Scientists (PYMS)

June 17th, PYMS launched their website! PYMS stands for Platform for Young Meta-Scientists. I'm a proud member of this super cool group of young researchers!

Check out the website: https://www.metaphant.net/

-

[Peers/Scientists] VVSOR Covid-19 protocol review

Statisticians across the country are joining (virtual) hands for the quality of clinical research on covid-19! Optimal statistical design can mean that we know sooner and with more reliability how to treat and prevent covid-19 symptoms.

In Zoom meetings with biostatisticians from all over the country we were able to set up a review panel of 35 statisticians from both university medical centers and industry!

More information at https://www.vvsor.nl/articles/covid-19-protocol-review/ and in this blog post.

-

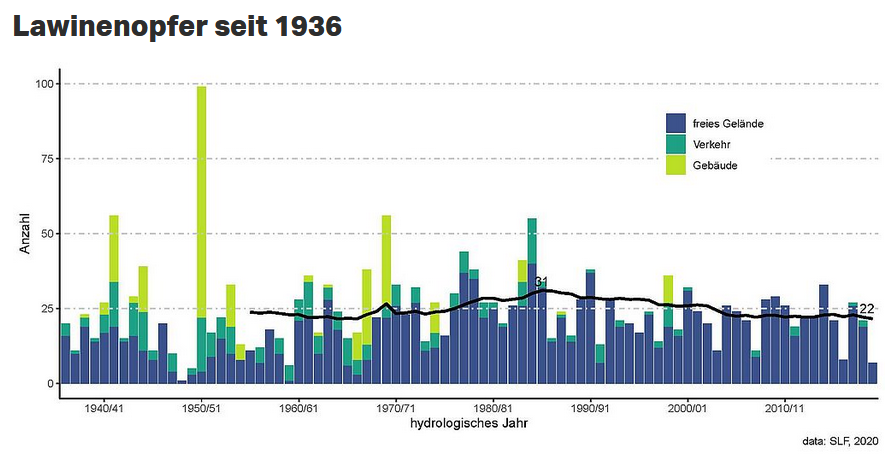

[General] How risky is ski touring?

Source of the graph: SLF (avalanche victims in Switzerland)

"How risky is ski touring?" That is the question I wrote about for the February 2020 issue of Hoogtelijn: Judith ter Schure (2020) Hoe risicovol is toerskien.pdf.

The magazine lands on the doormat of NKBV members today and can also be read online (pp. 24, 25, 27): the online edition of Hoogtelijn 2020-1.

In this blog post you will find links to the source material on which I based my article.

-

[Peers] Time in Meta-Analysis

Workshop Design and Analysis of Replication Studies: ReproZürich

This was a very nice workshop with great speakers and interesting discussion. Please find my own abstract and slides below.

Scientific knowledge accumulates and therefore always has a (partly) sequential nature. As a result, the exchangeability assumption in conventional meta-analysis cannot be met if the existence of a replication (or, more generally, of later studies in a series) depends on earlier results. Such dependencies arise at the study level but also at the meta-analysis level, if new studies are informed by a systematic review of existing results in order to reduce research waste.

Fortunately, study series with such dependencies can be meta-analyzed with Safe Tests. These tests preserve type I error control, even if the analysis is updated after each new study. Moreover, they introduce a novel approach to handling heterogeneity, a bottleneck in sequential meta-analysis. This strength of Safe Tests for composite null hypotheses lies in controlling type I errors over the entire set of null distributions by specifying the test statistic for a worst-case prior on the null. If for each study such a (study-specific) test statistic is provided, the combined test controls type I error even if each study is generated by a different null distribution. These properties are optimized in so-called GROW Safe Tests. Hence, they optimize the ability to reject the null hypothesis and make intermediate decisions in a growing series, without the need to model heterogeneity.

A novel approach to meta-analysis testing under heterogeneity

Please find my slides here: Judith ter Schure - Time in meta-analysis.pdf

And visit the website for abstracts and slides of other speakers: https://www.reprozurich.org/
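The abstract's claim that type I error control survives updating the analysis after each new study can be illustrated with a small simulation. This is only a sketch under strong simplifying assumptions, not the GROW Safe Test from the talk: it uses a plain likelihood ratio of N(delta, 1) against a simple null N(0, 1), multiplied across studies, and compares it with a cumulative z-test that is re-checked after every study. The function name, `delta`, the study sizes and the number of studies are all hypothetical choices made for the illustration.

```python
import math
import random
from statistics import NormalDist


def simulate_null_series(n_series=2000, max_studies=20, n_per_study=20,
                         delta=0.3, alpha=0.05, seed=1):
    """Fraction of null study series that EVER reject, for two monitoring rules.

    Illustration only: a simple-vs-simple likelihood ratio stands in for a
    safe test, and every study has the same (assumed) sample size.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha)  # one-sided critical value
    log_threshold = math.log(1 / alpha)       # reject when the product >= 1/alpha
    lr_rejections = 0
    z_rejections = 0
    for _ in range(n_series):
        log_lr = 0.0     # running log of the product of per-study likelihood ratios
        total_sum = 0.0  # running sum of all observations in the series
        total_n = 0
        lr_hit = False
        z_hit = False
        for _ in range(max_studies):
            # Under the null, the sum of n N(0, 1) observations is N(0, n).
            study_sum = rng.gauss(0.0, math.sqrt(n_per_study))
            # Log likelihood ratio of N(delta, 1) versus N(0, 1) for this study.
            log_lr += delta * study_sum - n_per_study * delta ** 2 / 2
            total_sum += study_sum
            total_n += n_per_study
            # Look at the evidence after every study (optional continuation).
            if log_lr >= log_threshold:
                lr_hit = True
            if total_sum / math.sqrt(total_n) >= z_crit:
                z_hit = True
        lr_rejections += int(lr_hit)
        z_rejections += int(z_hit)
    return lr_rejections / n_series, z_rejections / n_series


lr_rate, z_rate = simulate_null_series()
print(f"likelihood-ratio product ever >= 1/alpha: {lr_rate:.3f}")
print(f"cumulative z-test ever significant:       {z_rate:.3f}")
```

In this toy setting the likelihood-ratio rule should stay below alpha no matter how often the series is inspected, while the repeatedly checked z-test should reject far more often than alpha. Handling composite nulls and heterogeneity, the actual subject of the talk, is beyond this sketch.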

-

[Peers] How can meta-research improve statistical research and practice?

Parallel Session at the Tilburg Meta-Research Day

Below are the abstract and my take-away of the discussion.

How can meta-research improve statistical research/practice?

Meta-research has spurred two opposing perspectives on science: one treats individual studies and study series as two separate publication types (e.g., in Ioannidis' 2005 paper 'Why Most Published Research Findings Are False') and one treats individual studies as part of a series that needs to be informed by that series (e.g., in the Chalmers et al. 2014 paper 'How to increase value and reduce waste when research priorities are set'). These perspectives decide what statistical methods are valid, both at the individual-study level and at the meta-analysis level. Could meta-research also inform which perspective is most appropriate and improve the corresponding statistical research and practice?

Take-away

It varies a lot per field whether scientists, in their experimental design, actually feel that they contribute to an accumulating series of studies. In some fields there is awareness that the results of an experiment will someday end up in a meta-analysis with existing experiments, while in others scientists aim to design experiments that are as 'refreshingly new' as possible. Picture a table that puts studies together in one column if they could be meta-analyzed: under this latter approach, scientists mainly aim to initiate new columns. This pre-experimental perspective might be different from the meta-analysis perspective, in which a systematic search and inclusion criteria might still force those experiments together in one column, even though they weren't intended that way. This practice might erode trust in meta-analyses that try to synthesize effects from experiments that are too different.

The discussion was very hesitant towards enforcing rules (e.g. by funders or universities) on scientists in priority setting, such as whether a field needs more columns of 'refreshingly new' experiments, or needs replications of existing studies (extra rows) so a field can settle on a specific topic in one column with a meta-analysis.

In terms of statistical consequences, sequential processes might still be at play if scientists designing experiments know about the results of other experiments that might end up in the same meta-analysis. Full exchangeability in meta-analysis means that no one would have decided differently on the feasibility or design of an experiment had the results of others been different. If that assumption cannot be met, we should consider studies as part of a series in our statistical meta-analysis, even without forcing this approach in the design phase.

For a full summary of the discussion, see this document: Judith ter Schure - Tilburg Meta-Research Day Parallel Session Statistics summary.pdf

-

[Peers] Talks Talks Talks! :)

Inspired by a 'How to talk about mathematics' presentation by Ionica Smeets in August last year, I created this outline slide for my talk at the University of Amsterdam. Looking forward to this week, with three talks: in Tilburg on Tuesday, in Amsterdam (UvA) on Friday and again in Amsterdam (CWI) on Saturday.

Tuesday October 1st, Tilburg Colloquium

The rules of the game called significance testing (wink wink)

If significance testing were a game, it would be dictated by chance and encourage researchers to cheat. A dominant rule would be that once you conduct a study, you go all in: you have one go at your one preregistered hypothesis (one outcome measure, one analysis plan, one sample size or stopping rule, etc.) and either you win (significance!) or you lose everything. The game does not allow you to conduct a second study, unless you prespecified that as well, together with the first. Strategies that base future studies on previous results, and then meta-analyze, are not allowed. Honestly reporting the p-value next to your 'I lost everything' result does not help; that is like reporting the margin in a winner-takes-all game. In a new round you have to start over again. No wonder researchers cheat this game by file-drawering and p-hacking. The best way to solve this might be to change the game. Fortunately, this is possible by preventing researchers from losing everything and allowing them to reinvest their previous earnings in new studies. This new game keeps score in terms of $-values instead of p-values, and tests with Safe Tests.

Friday October 4th, University of Amsterdam

Accumulation Bias in Meta-Analysis: How to Describe and How to Handle It

Studies accumulate over time and meta-analyses are mainly retrospective. These two characteristics introduce dependencies between the analysis time, at which a series of studies is up for meta-analysis, and results within the series. Dependencies introduce bias (Accumulation Bias) and invalidate the sampling distribution assumed for p-value tests, thus inflating type-I errors. But dependencies are also inevitable, since for science to accumulate efficiently, new research needs to be informed by past results. In our paper, we investigate various ways in which time influences error control in meta-analysis testing. We introduce an Accumulation Bias Framework that allows us to model a wide variety of practically occurring dependencies, including study series accumulation, meta-analysis timing, and approaches to multiple testing in living systematic reviews. The strength of this framework is that it shows how all dependencies affect p-value-based tests in a similar manner. This leads to two main conclusions. First, Accumulation Bias is inevitable, and even if it can be approximated and accounted for, no valid p-value tests can be constructed. Second, tests based on likelihood ratios withstand Accumulation Bias: they provide bounds on error probabilities that remain valid despite the bias.

Saturday October 5th, Weekend van de Wetenschap CWI

https://www.weekendvandewetenschap.nl/activiteiten/2019/wiskunde-en-informaticalezingen/

See this video from last year's Nacht van de Wetenschap in The Hague!
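To make the Accumulation Bias abstract of the Friday talk concrete, here is a minimal simulation sketch of one possible dependency. The rule used (a series is only meta-analyzed if its first study was significant) is a hypothetical example chosen for illustration, and the function name, the number of studies and the equal study sizes are assumptions, not details taken from the paper.

```python
import math
import random
from statistics import NormalDist


def conditional_type1_error(n_series=100_000, n_studies=3, alpha=0.05, seed=7):
    """Type-I error of a meta-analysis z-test among series that get analysed.

    Hypothetical dependency: a series under the null only reaches the
    meta-analysis stage if its FIRST study was significant.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha)  # one-sided critical value
    analysed = 0
    false_positives = 0
    for _ in range(n_series):
        # Each study is summarised by a z-score, which is N(0, 1) under the null.
        z_scores = [rng.gauss(0.0, 1.0) for _ in range(n_studies)]
        if z_scores[0] < z_crit:
            continue  # this series never grows into a meta-analysis
        analysed += 1
        # Fixed-effect meta-analysis z-score for equally sized studies.
        z_meta = sum(z_scores) / math.sqrt(n_studies)
        if z_meta >= z_crit:
            false_positives += 1
    return false_positives / analysed, analysed


rate, analysed = conditional_type1_error()
print(f"series that reached meta-analysis: {analysed}")
print(f"share of those with a significant meta-analysis: {rate:.3f}")
```

Every study in this sketch is pure noise, yet among the series that are actually meta-analyzed the share of significant results should come out well above the nominal alpha. That is the kind of dependency between analysis time and results the abstract describes.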

-

[General] Weekend van de Wetenschap

Mathematics and computer science lectures at CWI

https://www.cwi.nl/events/2019/open-dag-2019/cwi-open-dag-5-oktober-2019

(Medical) statistics: deviating is the new normal

Automatic detection: we want it everywhere, for suspicious persons at an airport, possible tax fraud, early symptoms of a disease. But the technology can get it wrong and wrongly flag someone as a terrorist, fraudster or terminally ill patient. Errors that humans already make easily are far more dangerous in automated processes. That is why statistics is more important than ever. I explain how statisticians can help make our society safer, more efficient and healthier. And why scientific research is urgently needed, in statistics too.

Lectures